The National Institute of Standards and Technology (NIST) is a U.S. federal agency whose mission is to promote innovation and industrial competitiveness. Its laboratories run programs of all kinds, from basic science to engineering, and it periodically assesses cutting-edge technologies and innovations.

How is NIST different from ISO?

NIST is a U.S. federal agency, whereas ISO is an international standards body. NIST contributes to international standards by taking an active part in the work of the International Organization for Standardization (ISO). Many of the evaluations NIST performs establish technical criteria that are later carried over into ISO standards.

What role does NIST play in biometrics and identity verification?

NIST has been measuring and evaluating the performance of biometric systems for more than 60 years, covering fingerprints, faces, voice, iris, palm prints, and more. These evaluations have given it unique insight into the strengths and weaknesses of each biometric modality. They have also helped it establish criteria for deciding which technologies are ready for mass use in different situations, such as facial biometrics for identity verification.

Why is NIST considered the world's leading evaluator?

NIST's knowledge of biometrics is therefore extensive, which allows it to design accurate and thorough assessments. NIST also has access to unique U.S. federal resources, which it uses to build assessment scenarios from real operational data. These datasets are extremely large, so NIST can measure error rates as low as one in tens of thousands, or even around one error in a million.
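A short calculation shows why dataset size limits the error rates that can be measured. The sketch below is a minimal illustration, not NIST's methodology. It assumes independent comparisons, which real evaluation data only approximates because the same people appear in many comparisons. It computes the exact one-sided 95% upper bound on an error rate when zero errors are observed in N comparisons. That bound is close to 3/N, the so-called rule of three, so supporting a rate of one in a million takes roughly three million error-free comparisons.

```python
import math


def upper_bound_zero_errors(n_trials: int, confidence: float = 0.95) -> float:
    """Exact one-sided upper confidence bound on an error rate when no errors
    are observed in n_trials independent comparisons: solves (1 - p)**n = alpha."""
    alpha = 1.0 - confidence
    return -math.expm1(math.log(alpha) / n_trials)  # equals 1 - alpha**(1/n)


def trials_needed(target_rate: float, confidence: float = 0.95) -> int:
    """Smallest number of error-free comparisons whose upper bound is at most target_rate."""
    alpha = 1.0 - confidence
    # (1 - target_rate)**n <= alpha  <=>  n >= ln(alpha) / ln(1 - target_rate)
    return math.ceil(math.log(alpha) / math.log1p(-target_rate))


if __name__ == "__main__":
    for n in (1_000, 100_000, 10_000_000):
        print(f"{n:>10,} error-free comparisons -> error rate < {upper_bound_zero_errors(n):.1e}")
    print(f"{trials_needed(1e-6):,} comparisons needed to support an error rate of 1e-6")
```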

What biometric technologies does NIST evaluate, and what aspects of those technologies does it focus on?

NIST currently runs facial, voice, fingerprint, iris, and DNA biometric assessments. These assessments cover both verification and identification scenarios. Verification, or 1:1 comparison, confirms or denies a claimed identity by comparing two biometric samples against each other (e.g., two face images or two voice recordings). Identification, or 1:N comparison, is the process of finding an identity within a collection of people (e.g., a set of face images or voice recordings).
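The minimal Python sketch below makes the 1:1 versus 1:N distinction concrete. It rests on a hypothetical setup: each face image or voice recording has already been converted into a fixed-length embedding vector, and cosine similarity with a single threshold decides matches. Real engines use their own templates, scores, and decision logic. The names `verify`, `identify`, and the gallery entries are illustrative only.

```python
import math
from typing import Dict, Optional, Sequence

# Hypothetical representation: each biometric sample has already been turned
# into a fixed-length, non-zero embedding vector by some recognition engine.
Template = Sequence[float]


def cosine_similarity(a: Template, b: Template) -> float:
    """Similarity score between two templates (higher means more alike)."""
    dot = sum(x * y for x, y in zip(a, b))
    norms = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norms


def verify(probe: Template, reference: Template, threshold: float) -> bool:
    """1:1 verification: confirm or deny one claimed identity."""
    return cosine_similarity(probe, reference) >= threshold


def identify(probe: Template, gallery: Dict[str, Template],
             threshold: float) -> Optional[str]:
    """1:N identification: return the best-scoring enrolled identity,
    or None if no gallery entry reaches the threshold."""
    best_id: Optional[str] = None
    best_score = -math.inf
    for identity, reference in gallery.items():
        score = cosine_similarity(probe, reference)
        if score > best_score:
            best_id, best_score = identity, score
    return best_id if best_score >= threshold else None


if __name__ == "__main__":
    gallery = {"alice": [1.0, 0.0, 0.2], "bob": [0.0, 1.0, 0.1]}
    probe = [0.9, 0.1, 0.2]
    print(verify(probe, gallery["alice"], threshold=0.8))     # True
    print(identify(probe, gallery, threshold=0.8))            # alice
    print(identify([0.0, 0.0, 1.0], gallery, threshold=0.8))  # None
```

Verification answers a yes/no question about one claimed identity. Identification must search the whole gallery, which is why it has its own error metrics.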

For facial biometrics, the evaluations focus first on the False Non-Match Rate (FNMR) for 1:1 and the False Negative Identification Rate (FNIR) for 1:N. These metrics measure the proportion of operations with a genuine image that fail to be verified or positively identified. In other words, they count how often the system returns a negative result for a known person when the result should be positive.

The other relevant metrics in facial biometrics are the False Match Rate (FMR) for 1:1 and the False Positive Identification Rate (FPIR) for 1:N. They measure the proportion of operations with an impostor image that are verified or positively identified. In other words, they count how often the system returns a positive result for a person who should be unknown. Together, these metrics describe the system's convenience (how many legitimate users get through the funnel) and its security (robustness against impostors).
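Both families of metrics come from counting threshold decisions on labeled comparisons. The sketch below computes FNMR and FMR from genuine and impostor similarity scores at a given threshold. Applied to mated and non-mated 1:N searches, the same counting gives a simplified form of FNIR and FPIR. NIST's identification metrics also take candidate-list rank into account, which this sketch ignores. The scores are made-up illustrative values, not real data.

```python
from typing import Sequence


def fnmr(genuine_scores: Sequence[float], threshold: float) -> float:
    """False Non-Match Rate: share of genuine (same-person) comparisons
    whose score falls below the decision threshold."""
    return sum(s < threshold for s in genuine_scores) / len(genuine_scores)


def fmr(impostor_scores: Sequence[float], threshold: float) -> float:
    """False Match Rate: share of impostor (different-person) comparisons
    whose score reaches the decision threshold."""
    return sum(s >= threshold for s in impostor_scores) / len(impostor_scores)


if __name__ == "__main__":
    genuine = [0.91, 0.85, 0.78, 0.55, 0.97]   # illustrative scores only
    impostor = [0.12, 0.30, 0.62, 0.05, 0.41]
    for t in (0.5, 0.6, 0.7):
        print(f"threshold={t}: FNMR={fnmr(genuine, t):.2f}  FMR={fmr(impostor, t):.2f}")
```

In this toy data, raising the threshold from 0.5 to 0.7 moves FNMR from 0.00 to 0.20 and FMR from 0.20 to 0.00. That is the trade-off between convenience and security.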

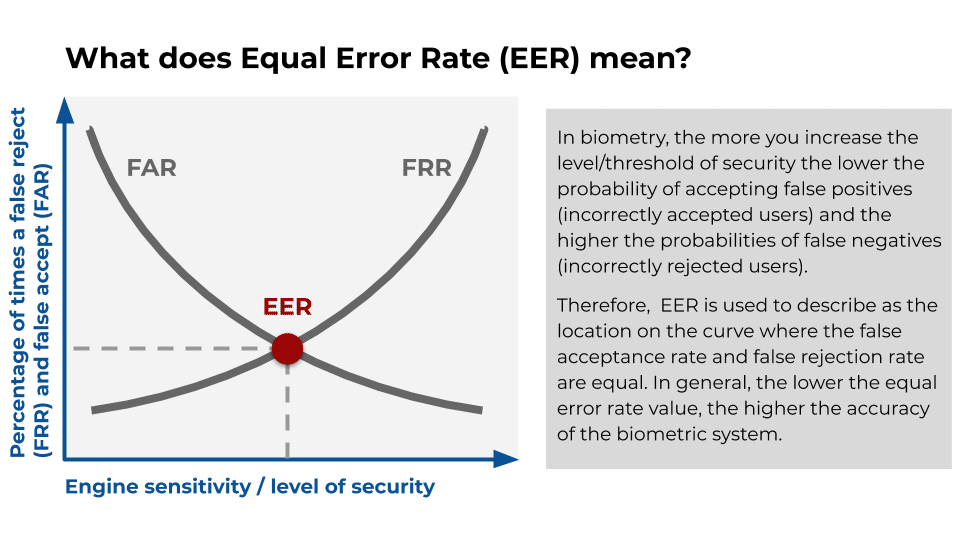

Voice biometric evaluations, known as the Speaker Recognition Evaluation (SRE), use a different metric. In the latest editions (SRE19, SRE20, and SRE21), the main metric is the so-called primary metric. It is a basic cost model for speaker verification performance, defined as a weighted sum of the False Rejection Rate (FRR) and False Acceptance Rate (FAR) probabilities, with FAR weighted 30 times more heavily than FRR.
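A cost of this kind can be sketched as a weighted sum evaluated over candidate thresholds. NIST's actual detection cost function is defined in each SRE evaluation plan in terms of miss and false-alarm costs and a target prior. The code below does not reproduce those parameters. It simply uses the 30:1 weighting mentioned above as a hypothetical default and reports the minimum cost over thresholds taken from the observed scores.

```python
from typing import Sequence, Tuple


def error_rates(target: Sequence[float], nontarget: Sequence[float],
                threshold: float) -> Tuple[float, float]:
    """Return (P_FR, P_FA): share of target trials rejected and
    share of non-target trials accepted at this threshold."""
    p_fr = sum(s < threshold for s in target) / len(target)
    p_fa = sum(s >= threshold for s in nontarget) / len(nontarget)
    return p_fr, p_fa


def weighted_cost(p_fr: float, p_fa: float,
                  w_fr: float = 1.0, w_fa: float = 30.0) -> float:
    """Weighted sum of error probabilities. The 30:1 default mirrors the
    text above and is a hypothetical choice, not an official plan value."""
    return w_fr * p_fr + w_fa * p_fa


def min_weighted_cost(target: Sequence[float], nontarget: Sequence[float],
                      w_fr: float = 1.0, w_fa: float = 30.0) -> float:
    """Lowest cost over thresholds taken from the observed scores,
    plus +inf (reject every trial)."""
    thresholds = sorted(set(target) | set(nontarget)) + [float("inf")]
    return min(weighted_cost(*error_rates(target, nontarget, t), w_fr, w_fa)
               for t in thresholds)
```

Because each false acceptance costs 30 times as much as a false rejection here, the cheapest threshold tends to sit high and rejects borderline trials.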

This weighting is set by NIST and may not match the priorities of every use case. Commercial comparisons between systems therefore often rely on the Equal Error Rate (EER) instead. The EER is the operating point on the error curve where the false acceptance rate and the false rejection rate are equal.
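A simple way to estimate the EER is to sweep the decision threshold over the observed scores and take the point where FAR and FRR are closest. This is a minimal sketch. Scoring tools commonly interpolate between operating points (for example, on the ROC convex hull), so their values can differ slightly on small datasets.

```python
from typing import Optional, Sequence, Tuple


def equal_error_rate(target: Sequence[float],
                     nontarget: Sequence[float]) -> Tuple[float, float]:
    """Return (EER estimate, threshold) from a sweep over observed scores.
    A trial is accepted when its score is >= the threshold."""
    best: Optional[Tuple[float, float, float]] = None  # (|FAR - FRR|, EER, threshold)
    for t in sorted(set(target) | set(nontarget)):
        frr = sum(s < t for s in target) / len(target)
        far = sum(s >= t for s in nontarget) / len(nontarget)
        gap = abs(far - frr)
        if best is None or gap < best[0]:
            best = (gap, (far + frr) / 2, t)
    assert best is not None, "need at least one score"
    _, eer, threshold = best
    return eer, threshold


if __name__ == "__main__":
    print(equal_error_rate([0.9, 0.8, 0.7, 0.4], [0.2, 0.3, 0.6, 0.5]))  # (0.25, 0.6)
```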

What databases does NIST use to validate biometric engines?

NIST evaluates biometric engines on different databases that resemble different production scenarios.

In facial biometrics evaluations, these databases are sets of face photographs used to measure system performance in different assessments. NIST uses several of them, for example:

- Visa – Consists of photographs taken in compliance with ISO/ICAO standards for facial photography. This would correspond to photos used for passports or identity documents.

- Mugshot – Photographs that follow the Visa capture standards less strictly. They are usually photographs taken by the police, for example when a person is booked after an arrest.

- Wild – Photographs captured under uncontrolled conditions, which may come from news articles, newspapers, sporting events, etc.

- Border – Consists of photographs taken at border control facilities, typically airports.

Source: Image taken from NIST FRVT 1:1 report (https://pages.nist.gov/frvt/reports/11/frvt_11_report.pdf)

Voice biometrics follows a different philosophy. Facial biometrics engines are evaluated on clearly defined, fixed datasets. For voice, NIST instead first provides a dataset used only for training the algorithms, then a small dataset for refinement or fine-tuning, and finally the evaluation dataset, which contains many more recordings.

NIST changes the training and evaluation datasets of its voice evaluations every year. The changes usually affect the language, the channel, and the number of speakers, and sometimes even add datasets for multimodal biometrics.

What types of evaluations exist, and how often are they conducted?

For facial biometrics, there is the Face Recognition Vendor Test (FRVT), a set of ongoing evaluations with no end date:

- FRVT 1:1 – Evaluates facial verification systems using protocols in which pairs of images are compared. Each pair is labeled as either genuine (same person) or impostor (faces of different people).

- FRVT 1:N – Evaluates facial identification systems using protocols built around a database of known persons. The protocols include searches for people known to be in that database (expected positive result) and for people known not to be there (expected negative result).

- FRVT Quality – Evaluates algorithms that measure the quality of a face photograph. Quality is currently defined as a predictor of a biometric system's ability to return a correct response for a given image. This evaluation therefore measures how well the quality predictor correlates with the system's accuracy on those images.

- FRVT Morph – Evaluates the ability to detect morphing attacks. In this attack, the impostor blends two photographs, one of themselves and one of a different person, and gets the authorities to issue a passport or identity card with that blended photo. The impostor then uses the document to impersonate the other person, for example at borders and airports.

- FRVT PAD – Evaluates presentation attack detection. In the attacks evaluated, physical artifacts (paper masks, photographs shown on screens, realistic latex masks, etc.) are presented to pass the artifact off as a live sample of the person being impersonated. NIST also evaluates identity concealment. Here the attacker does not try to look like anyone else but tries to enroll in the system with a captured face that cannot be linked to their real face.
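The FRVT Quality idea, that a quality score should predict recognition accuracy, can be illustrated with an error-versus-discard curve. The procedure rejects the lowest-quality fraction of comparisons and recomputes FNMR on the rest. If the quality score is informative, FNMR should fall as more low-quality images are discarded. This is a sketch of the general technique on hypothetical inputs, not NIST's exact protocol.

```python
from typing import List, Sequence, Tuple


def error_vs_discard(pairs: Sequence[Tuple[float, float]], threshold: float,
                     fractions: Sequence[float]) -> List[Tuple[float, float]]:
    """pairs holds (quality_score, genuine_comparison_score) per genuine comparison.
    Returns (discard_fraction, FNMR on the retained comparisons).
    Each fraction must be in [0, 1) so that at least one comparison is kept."""
    ranked = sorted(pairs, key=lambda p: p[0])  # lowest quality first
    results = []
    for f in fractions:
        kept = ranked[int(f * len(ranked)):]    # drop the lowest-quality fraction
        fnmr = sum(score < threshold for _, score in kept) / len(kept)
        results.append((f, fnmr))
    return results


if __name__ == "__main__":
    data = [(0.1, 0.3), (0.2, 0.5), (0.5, 0.8), (0.6, 0.4), (0.8, 0.9), (0.9, 0.95)]
    for f, rate in error_vs_discard(data, threshold=0.6, fractions=(0.0, 0.25, 0.5)):
        print(f"discard {f:.0%}: FNMR = {rate:.2f}")
```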

What are the requirements for submitting a system for a NIST evaluation?

Here too, facial and voice biometric evaluations differ. For facial biometrics, the FRVT requires the submission of a system that can be deployed in a commercial application, and the system must implement a C++ API defined by NIST. The system is provided to the organizers confidentially and free of charge, and participation in the evaluation is also free. In short, participants submit a version of their algorithms, and NIST evaluates them on data that it does not share.

By contrast, in the SRE, NIST sends out the training and evaluation data, and participants return their comparison scores, which only NIST can evaluate. Participants can therefore apply all the capacity and resources they consider appropriate to obtain the best possible result (fusion of different systems, calibration, etc.). Among other reasons, this is possible because they do not have to send the algorithm itself to NIST.
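SRE participants submit scores rather than algorithms, so they can combine several systems before submitting. A common approach is linear score-level fusion with an affine calibration, sketched below. The weights and offset are hypothetical. In practice they would normally be trained on the development data, for example with logistic regression.

```python
from typing import Sequence


def fuse_scores(system_scores: Sequence[float], weights: Sequence[float],
                offset: float) -> float:
    """Linear score-level fusion with affine calibration: offset + sum(w_i * s_i).
    The weights and offset are hypothetical placeholders; real values would be
    learned on development data."""
    if len(system_scores) != len(weights):
        raise ValueError("one weight per subsystem is required")
    return offset + sum(w * s for w, s in zip(weights, system_scores))


if __name__ == "__main__":
    # Two hypothetical subsystems scoring the same trial.
    print(fuse_scores([2.1, 0.7], weights=[0.6, 1.2], offset=-0.5))
```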

Participation in the SRE is open to anyone who finds the evaluation of interest and can comply with the rules outlined in the evaluation plan. There was also no cost to participate in SRE21: the evaluation, data, web platform, and scoring software were available free of charge. However, NIST requires participating teams to be represented at the post-evaluation workshop.

It should be stressed that, unlike the facial biometrics evaluations, the voice evaluation does not aim to compare commercial solutions (it is not a vendor test). Its goal is to gain insight into the state of the art and promote its evolution within the scientific community. For this reason, the SRE allows participants to remain anonymous. Participants are generally teams of different kinds, such as universities, laboratories, technological institutes, and companies. The SRE also places strong restrictions on how industry may use the results.

Why is it essential that NIST evaluates different biometric technology providers?

There is a regulatory gap when it comes to ensuring that biometric systems behave as technically expected. Some standards allow systems to be tested, such as ISO/IEC 30107, which was written to evaluate how well biometric systems, including facial biometrics, detect presentation attacks. However, none of these standards sets a binding certification level. They only provide conformity to an evaluation methodology.

Consequently, standards are being developed in Europe and internationally to fill this gap. Meanwhile, NIST plays an essential role by offering a unique way to compare competitors under the same conditions.

What challenges will NIST address regarding the future of biometrics?

NIST discusses three main problems regarding facial biometrics engines:

- Demographic differentials – These are commonly known as "biases." NIST is expected to keep emphasizing analysis of the state of the art with respect to these differentials and to track how systems evolve on this issue over time.

- Biometrics with twins – Twins represent about 4% of the population in the USA, so there is strong interest in improving how biometric systems perform with them. The technology is not yet ready, but NIST sets challenges of this kind to encourage developers to participate and show how their systems actually perform with twins.

- Morphing – NIST already runs a dedicated evaluation on this topic and will probably expand its work, publishing special reports that show how morphing detection technology is evolving. The technology is not yet ready for satisfactory use in products. Even so, morphing is a significant problem for states, especially at border crossings between countries.
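One simple way to quantify demographic differentials is to compute an error rate such as FMR separately for each demographic group at a single fixed threshold and then compare the groups. The sketch below does this and reports the ratio between the worst and best group. The group labels and scores are hypothetical, and NIST's demographic analyses are considerably more detailed.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def fmr_by_group(impostor_trials: Iterable[Tuple[str, float]],
                 threshold: float) -> Dict[str, float]:
    """FMR per demographic group at one threshold shared by all groups.
    impostor_trials holds (group_label, score) for each impostor comparison."""
    counts: Dict[str, List[int]] = defaultdict(lambda: [0, 0])  # group -> [false matches, trials]
    for group, score in impostor_trials:
        counts[group][1] += 1
        if score >= threshold:
            counts[group][0] += 1
    return {group: fm / n for group, (fm, n) in counts.items()}


def differential(rates: Dict[str, float]) -> float:
    """Ratio between the highest and lowest group error rates (1.0 means no differential)."""
    lowest, highest = min(rates.values()), max(rates.values())
    if highest == 0:
        return 1.0  # no group produced any false matches
    return highest / lowest if lowest > 0 else float("inf")


if __name__ == "__main__":
    trials = [("A", 0.7), ("A", 0.2), ("A", 0.1), ("A", 0.3),
              ("B", 0.8), ("B", 0.75), ("B", 0.2), ("B", 0.1)]  # hypothetical data
    rates = fmr_by_group(trials, threshold=0.6)
    print(rates, differential(rates))
```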

Veridas at the NIST face and voice biometrics evaluations

In line with our commitment to transparency and to the regulation of biometric technologies, at Veridas we periodically submit our facial and voice biometrics engines to NIST evaluations. We do so because NIST is currently the best reference for knowing which systems are competitive and valuable in production and which do not achieve the expected results.

At Veridas, we submit to NIST only the biometric engines that we deliver to real customers for production use. This avoids adjustments that other players might exploit to get better results with systems that never actually reach the market. Even so, Veridas consistently obtains excellent results across all categories, ranking among the best biometric engines in the world.

Specifically, as of November 2022, Veridas ranks in the top 20% of companies in the world in facial biometrics evaluations (FRVT 1:1) and is the 5th best European company in this category. As for voice biometrics, NIST rules prevent us from disclosing our exact position, but Veridas ranks prominently among the best systems in the world.

Thanks to this continuous commitment to excellence, we already have first-class clients in more than 25 countries who have opted for certified, transparent, and fully automated technology. Technology made by Veridas.

Paco Zamora

Face Biometrics expert

Santiago Prieto

Voice Biometrics expert

Ramón Fernandez

Biometrics expert

![[FREE DEMO]: Find out how our technology works live](png/9d2f027d-2e80-4f2b-8103-8f570f0ddc7c.png)